Emergency rooms are the front lines of critical care, where every second matters. A fast, accurate diagnosis can mean the difference between life and death, but radiology departments are under growing pressure.

Overcrowded hospitals, a rising number of imaging scans, and a global shortage of radiologists have created a bottleneck in emergency care. This is where AI-powered radiology makes a difference—enhancing efficiency, accuracy, and patient outcomes.

The Growing Burden on Emergency Radiology

✅ More emergency visits than ever before

✅ An aging population with complex conditions

✅ A rise in patients with multiple comorbidities

Radiologists must review thousands of images daily, often under intense time constraints. Missed findings and delayed diagnoses can have devastating consequences.

AI: A Game-Changer for Emergency Radiology

AI can flag suspected urgent findings within seconds, moving the most critical patients to the front of the reading queue for faster intervention.

🚀 How AI Enhances Emergency Workflow:

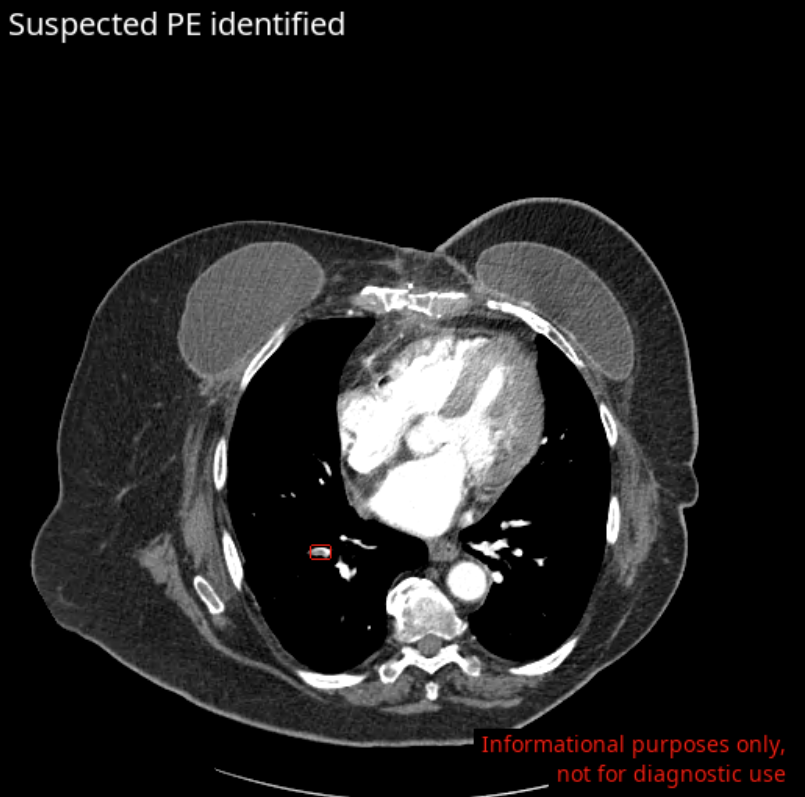

✔ Automates triage—flags life-threatening conditions (e.g., stroke, pulmonary embolism, traumatic fractures) in seconds

✔ Accelerates scan interpretation, minimizing diagnostic delays

With AI, radiologists can work smarter and faster, ensuring the most urgent cases receive immediate attention.
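To make the triage idea concrete, here is a minimal sketch of how a reading worklist might be reordered once an AI tool has flagged a suspected finding. It is an illustration only, not how any particular product works: the `Worklist` class, the `URGENCY` ranks, and the accession numbers are all hypothetical, and a real deployment would take its priorities from clinical protocols.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count
from typing import Optional

# Hypothetical urgency ranks (assumption): lower number = read sooner.
URGENCY = {"stroke": 0, "pulmonary_embolism": 0, "traumatic_fracture": 1}
ROUTINE = 9  # studies with no AI flag


@dataclass(order=True)
class Study:
    priority: int
    arrival: int  # tie-breaker: earlier arrivals first within a priority
    accession: str = field(compare=False)
    finding: Optional[str] = field(default=None, compare=False)


class Worklist:
    """Priority queue of studies, ordered by AI-flagged urgency, then arrival."""

    def __init__(self):
        self._heap = []
        self._arrivals = count()

    def add(self, accession, finding=None):
        # Unflagged or unrecognized findings fall back to routine priority.
        priority = URGENCY.get(finding, ROUTINE)
        study = Study(priority, next(self._arrivals), accession, finding)
        heapq.heappush(self._heap, study)

    def next_study(self):
        # Returns the most urgent study, or None if the worklist is empty.
        return heapq.heappop(self._heap) if self._heap else None


def reading_order(worklist):
    """Drain the worklist and return accession numbers in reading order."""
    order = []
    while (study := worklist.next_study()) is not None:
        order.append(study.accession)
    return order


worklist = Worklist()
worklist.add("A1")                        # routine study, arrived first
worklist.add("A2", "pulmonary_embolism")
worklist.add("A3", "traumatic_fracture")
worklist.add("A4", "stroke")
order = reading_order(worklist)
```

In this sketch the routine study that arrived first is read last, and the two highest-urgency flags are read in arrival order. The radiologist still reads every study; only the sequence changes.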

Reducing Radiologist Burnout & Improving Well-Being

The growing workload in emergency radiology is leading to high burnout rates, especially for on-call radiologists who face constant interruptions.

💡 How AI Supports Radiologist Well-Being:

✅ Reduces unnecessary nighttime interruptions for on-call doctors

✅ Creates a better work-life balance by reducing fatigue and stress

By acting as a first reader that screens incoming studies, AI minimizes disruptions, allowing radiologists to perform at their best while maintaining a healthier work-life balance.

Boosting Diagnostic Accuracy & Speed

Errors in emergency radiology can be fatal. AI acts as a second set of eyes, detecting subtle abnormalities that might be missed due to fatigue or lack of experience.

🔍 AI Enhances Radiologist Confidence:

✅ Reduces misdiagnoses and overlooked findings

✅ Boosts accuracy in high-risk cases

The result? Better care, fewer complications, and improved patient outcomes.

AI-Driven Quantification for Smarter Treatment Decisions

AI doesn’t just detect abnormalities—it can quantify them.

📊 Why AI-Powered Quantification Matters:

✔ Accurate severity assessment ensures timely and appropriate treatment decisions

✔ Objective measurements help guide intervention strategies

By providing precise, real-time data, AI enables faster, more effective treatment planning, ultimately improving patient outcomes and quality of care.

Addressing the Radiologist Shortage with AI

With a global shortage of radiologists, AI serves as a trusted assistant, helping radiology teams:

💡 Automate repetitive tasks, reducing fatigue and burnout

💡 Ensure high-quality care, even with limited staffing

By augmenting radiologists’ capabilities, AI ensures faster and more effective emergency care despite workforce challenges.

AI’s Financial & Operational Benefits for Hospitals

AI improves patient care while reducing costs for hospitals.

🏥 How AI Optimizes Hospital Operations:

💰 Shortens hospital stays with faster diagnoses

💰 Minimizes unnecessary admissions by refining triage accuracy

💰 Lowers legal risks by reducing diagnostic errors

By improving workflow efficiency and reducing misdiagnoses, AI helps hospitals save time, cut costs, and enhance patient care.

Overcoming Barriers to AI Adoption

Despite its benefits, AI integration requires careful planning. Key considerations for a smooth rollout include:

✅ Compatibility with PACS/RIS systems

✅ Seamless integration into existing hospital workflows

✅ Regulatory compliance and AI transparency

AI is not a replacement for radiologists—it’s an indispensable tool that enhances their expertise while preserving human judgment.

AI + Radiology: The Future of Faster, Smarter Emergency Care

AI is revolutionizing emergency radiology, helping hospitals save lives, reduce errors, and improve efficiency. In an environment where every second counts, AI isn’t just an innovation—it’s a necessity.

Discover how Avicenna.AI’s comprehensive portfolio—featuring tools like CINA-ICH (intracranial hemorrhage), CINA-LVO (large vessel occlusion), CINA-ASPECTS (early ischemic stroke signs), CINA-AD (aortic dissection), CINA-PE (pulmonary embolism), and CINA-CSpine (cervical spine fractures)—is transforming emergency care worldwide.

🚀 Ready to transform your emergency radiology workflow? Request a meeting today.